A few weeks ago, Anthropic gave Claude filesystem access. If you’ve used claude.ai recently, you’ve seen it - Claude can now write files, run Python, execute shell commands.

This wasn’t just a feature. It was a bet on how agents should interact with the world.

If you’re building an agent, you have two paths. Path one: tools. Want the agent to query a database? Build a tool. Search logs? Another tool. Transform data? Tool. Each one needs a schema, validation, error handling. Five actions means five tools. It doesn’t scale.

Path two: give it a terminal. A bash shell is a meta-tool. One interface, infinite capability. The agent inherits everything the OS offers - Python, grep, awk, curl, the entire unix toolkit. Training data is abundant. The mental model is universal.

Anthropic chose path two. But if you give an agent unlimited OS access, you have a problem: containment. The agent can run arbitrary code. That code might be malicious, buggy, or just resource-hungry.

I was building an agent backend and needed to solve this same problem. Before writing any code, I wanted to see how Anthropic does it.

Peeking inside Claude’s sandbox

Here’s the thing about reverse-engineering Claude’s sandbox: Claude is the best tool for the job. I can just ask it to inspect its own environment.

Me: What kernel are you running on?

Claude: The `runsc` hostname and 2016 date are gVisor's signature. I'm running in a gVisor sandbox, not a regular container or VM.

Me: What are your resource limits?

Claude: 9GB memory, 4 CPUs. Generous for a sandbox.

Me: How does network access work?

Claude: All traffic goes through a proxy. The credentials are a JWT. Let me decode it.

The proxy only allows traffic to package registries, GitHub, and Anthropic's API. The token expires in 4 hours. Network access exists, but it's tightly controlled.

Me: What's running as PID 1?

Claude: PID 1 is a custom binary - not bash, not systemd. It's listening on port 2024 and enforcing a 4GB memory limit. This is the agent executor that spawns shells for each command.

This revealed more than I expected.

Network control via egress proxy. Instead of disabling network entirely, all traffic routes through a proxy that validates JWTs. The token contains an allowlist of hosts (package registries, GitHub, Anthropic API) and expires in 4 hours. Claude has network access - it’s just tightly controlled.

A custom init process. PID 1 isn’t a shell - it’s /process_api, a purpose-built binary that receives commands and enforces resource limits at the application layer.

Running as root inside the sandbox. This surprised me. gVisor’s isolation is strong enough that they don’t bother with a non-root user.

| What I expected | What I found |
|---|---|
| No network | JWT-authenticated egress proxy |
| Shell as PID 1 | Custom /process_api binary |
| Non-root user | Root (uid=0) |

Claude's image is ~7GB, with ffmpeg, ImageMagick, LaTeX, Playwright, LibreOffice - everything for file processing. For my use case, a minimal ~200MB image is enough.
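Decoding the proxy token, as Claude did in the exchange above, takes nothing beyond the standard library: a JWT is three base64url segments joined by dots. A minimal sketch (the function name is my own) that reads the header and payload without verifying the signature, which is fine for inspection and never for authorization:

```python
import base64
import json


def decode_jwt_unverified(token: str) -> tuple[dict, dict]:
    """Return (header, payload) of a JWT. Does NOT check the signature: inspection only."""
    header_b64, payload_b64, _signature = token.split(".")

    def b64url_json(segment: str) -> dict:
        # JWT segments are base64url with the '=' padding stripped; add it back first.
        padded = segment + "=" * (-len(segment) % 4)
        return json.loads(base64.urlsafe_b64decode(padded))

    return b64url_json(header_b64), b64url_json(payload_b64)
```

The `exp` claim is a Unix timestamp, so `datetime.fromtimestamp(payload["exp"], tz=timezone.utc)` shows when the token stops working.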

The options

Firecracker is what AWS uses for Lambda. MicroVMs that boot in ~125ms with ~5MB memory overhead. True VM-level isolation. The catch: it needs direct KVM access. Standard Kubernetes nodes are themselves VMs, so Firecracker won't run on them unless you use bare-metal instances or a cloud that supports nested virtualization. Operationally complex.

gVisor intercepts syscalls in userspace. Your container gets its own “kernel” - really a Go program pretending to be a kernel. It works anywhere Docker runs. Google uses this for Cloud Run and GKE Sandbox. Simpler to operate, slightly more syscall overhead.

Plain Docker shares the kernel with the host. Container escapes are rare but real. For untrusted code, that’s not enough.

Anthropic chose gVisor. So did I.
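Whether Firecracker is even an option on a given node comes down to KVM. A quick, hypothetical check, assuming the usual `/dev/kvm` device path:

```python
import os


def kvm_available(path: str = "/dev/kvm") -> bool:
    """True if the KVM device exists and this process can open it read/write."""
    # Firecracker needs KVM; cloud VMs without nested virtualization don't expose it.
    return os.path.exists(path) and os.access(path, os.R_OK | os.W_OK)


if __name__ == "__main__":
    if kvm_available():
        print("KVM present: Firecracker is possible here")
    else:
        print("no usable /dev/kvm: Firecracker won't run here")
```

On a typical cloud VM without nested virtualization you should land in the second branch, which is exactly the situation that makes gVisor the practical choice.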

The sandbox image

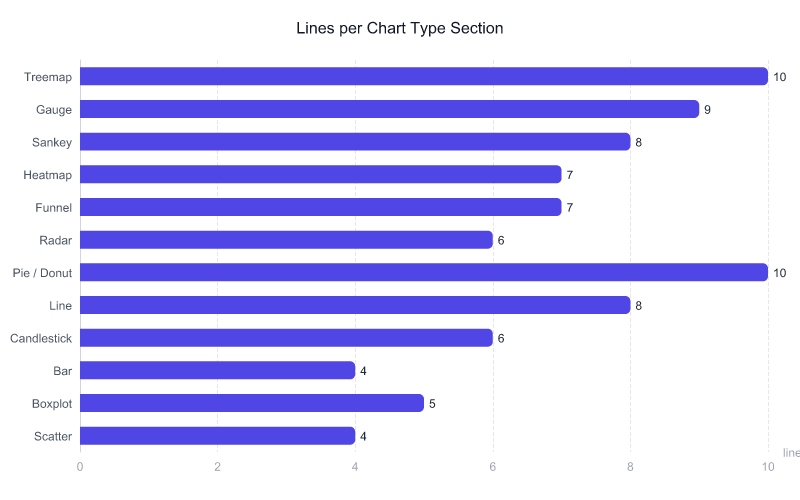

First, what goes in the container:

```dockerfile
FROM python:3.13-slim-bookworm

RUN apt-get update && apt-get install -y --no-install-recommends \
        coreutils grep sed gawk findutils \
        curl wget git jq tree vim-tiny less procps \
    && rm -rf /var/lib/apt/lists/*

RUN pip install --no-cache-dir aiohttp

RUN mkdir -p /mnt/user-data/uploads \
             /mnt/user-data/outputs \
             /workspace

# CMD runs this script directly as PID 1, so process_api.py needs a
# shebang (#!/usr/bin/env python3) and the executable bit
COPY process_api.py /usr/local/bin/process_api

WORKDIR /workspace

EXPOSE 2024

CMD ["/usr/local/bin/process_api", "--addr", "0.0.0.0:2024"]
```

Python, standard unix utils, and a directory structure that mirrors Claude’s. The key addition is process_api - an HTTP server that runs as PID 1 and handles command execution. No non-root user - gVisor provides the isolation boundary, not Linux permissions.

Container lifecycle

Three options for when containers live and die:

Pre-warmed pool: Keep N containers running idle, grab one when needed. ~10-50ms latency. But you’re managing a pool, handling assignment, dealing with cleanup. Complex.

Per-execution: New container for each command. Simplest code. ~600ms-1.2s cold start every time. Too slow.

Session-scoped: Container lives for the user session. Cold start once, then instant for every subsequent execution.

I went with session-scoped. The initial cold start (~500ms) hides behind LLM inference anyway - users are already waiting for the agent to think. By the time it responds, the container is warm.
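Hiding the cold start only works if container creation and the model call actually run at the same time. A minimal asyncio sketch of the first turn; `start_sandbox` and `call_llm` are hypothetical stand-ins that just sleep for a plausible duration:

```python
import asyncio
import time


async def start_sandbox(session_id: str) -> str:
    # Hypothetical stand-in for a ~0.5 s container cold start.
    await asyncio.sleep(0.5)
    return f"sandbox-{session_id}"


async def call_llm(prompt: str) -> str:
    # Hypothetical stand-in for model inference, which usually takes longer.
    await asyncio.sleep(1.0)
    return "python analyze.py"


async def first_turn(session_id: str, prompt: str) -> tuple[str, str, float]:
    """Start the container and the model call together: total wait is max(), not sum()."""
    started = time.perf_counter()
    sandbox, command = await asyncio.gather(start_sandbox(session_id), call_llm(prompt))
    return sandbox, command, time.perf_counter() - started


if __name__ == "__main__":
    sandbox, command, elapsed = asyncio.run(first_turn("abc123", "analyze the upload"))
    print(f"{sandbox} ready to run {command!r} after {elapsed:.2f}s")
```

The first turn costs about one second instead of one and a half. One caveat: the `create_session` method shown next calls docker-py, which is synchronous, so to get this overlap the Docker call would need to run through `asyncio.to_thread` (or an async Docker client) rather than directly on the event loop.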

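```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

import docker
from docker.models.containers import Container


@dataclass
class SandboxSession:
    session_id: str
    container: Container
    tenant_id: Optional[str]
    allowed_hosts: list[str]
    api_url: str  # where this container's process_api is reachable from the host


class SandboxManager:
    def __init__(
        self,
        signing_key: str,  # HMAC key shared with the egress proxy
        image_name: str = "agentbox-sandbox:latest",
        runtime: str = "runsc",
        storage_path: Optional[Path] = None,
        proxy_host: Optional[str] = None,
        proxy_port: int = 15004,
    ):
        self.docker_client = docker.from_env()
        self.signing_key = signing_key
        self.image_name = image_name
        self.runtime = runtime
        self.storage_path = storage_path
        self.proxy_host = proxy_host
        self.proxy_port = proxy_port
        self.sessions: dict[str, SandboxSession] = {}

    async def create_session(
        self,
        session_id: str,
        tenant_id: Optional[str] = None,
        allowed_hosts: Optional[list[str]] = None,
    ) -> SandboxSession:
        # Default allowed hosts for pip, npm, git
        hosts = allowed_hosts or [
            "pypi.org", "files.pythonhosted.org", "registry.npmjs.org", "github.com",
        ]

        # Create tenant storage if configured
        volumes = {}
        if tenant_id and self.storage_path:
            tenant_dir = self.storage_path / tenant_id
            (tenant_dir / "workspace").mkdir(parents=True, exist_ok=True)
            (tenant_dir / "outputs").mkdir(parents=True, exist_ok=True)
            volumes = {
                str(tenant_dir / "workspace"): {"bind": "/workspace", "mode": "rw"},
                str(tenant_dir / "outputs"): {"bind": "/mnt/user-data/outputs", "mode": "rw"},
            }

        # Generate proxy URL with JWT-encoded allowlist
        proxy_url = self._generate_proxy_url(session_id, tenant_id, hosts)

        # docker-py is synchronous: this call blocks the event loop while the container starts
        container = self.docker_client.containers.run(
            self.image_name,
            detach=True,
            name=f"sandbox-{session_id[:8]}",
            runtime=self.runtime,            # gVisor
            mem_limit="4g",
            cpu_period=100000,
            cpu_quota=400000,                # 4 CPUs
            security_opt=["no-new-privileges"],
            ports={"2024/tcp": None},        # publish process_api on a random host port
            # The proxy variables are advisory: code that opens sockets directly bypasses
            # them unless the container has no other route out (e.g. an internal=True network)
            environment={
                "HTTP_PROXY": proxy_url,
                "HTTPS_PROXY": proxy_url,
                "http_proxy": proxy_url,     # curl only reads the lowercase http_proxy
                "https_proxy": proxy_url,
            },
            volumes=volumes,
        )

        # Look up which host port Docker assigned to process_api
        container.reload()
        host_port = container.attrs["NetworkSettings"]["Ports"]["2024/tcp"][0]["HostPort"]
        api_url = f"http://127.0.0.1:{host_port}"

        session = SandboxSession(session_id, container, tenant_id, hosts, api_url)
        self.sessions[session_id] = session
        return session
```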

The key insight from Claude’s architecture: network isn’t disabled, it’s controlled. All traffic routes through an egress proxy that validates requests against an allowlist.

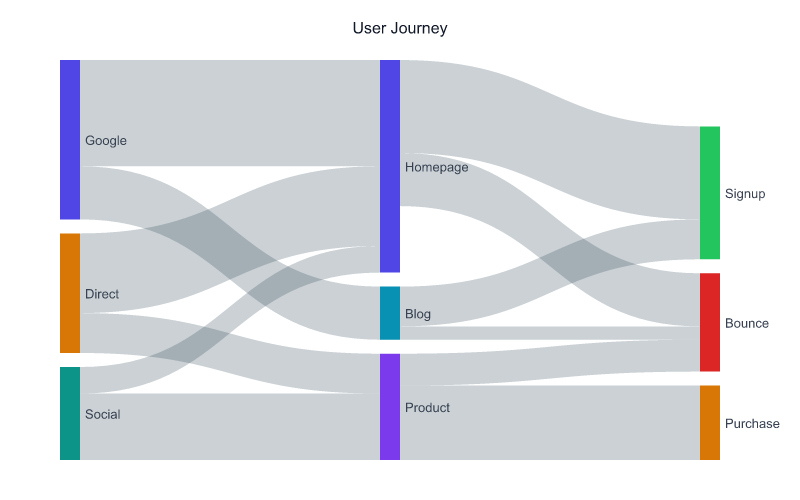

Defense in depth

Four layers of isolation:

gVisor runtime - The primary boundary. Syscalls are intercepted by a userspace kernel written in Go. Even if code escapes the container, it’s running against gVisor, not your host. This is why Claude can run as root - “root” inside gVisor has no privileges outside it.

Egress proxy with allowlist - All outbound traffic routes through a proxy that validates requests. The sandbox can reach pypi.org, github.com, and the npm registry, but nothing else. No exfiltration to arbitrary hosts, as long as the proxy is the container's only route out. The proxy authenticates requests with short-lived JWTs that encode the allowed hosts.

Resource limits - 4GB memory, 4 CPUs. A runaway process can’t starve the host. The init process can enforce additional limits at the application layer.

Filesystem mounts - Only /workspace and /mnt/user-data/outputs are writable. User uploads mount read-only. The sandbox can’t modify its own image or persist changes outside designated paths.
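The container's memory and CPU limits cap the sandbox as a whole; the init process can also cap each individual command. One way to do that, not necessarily what /process_api does, is to set POSIX rlimits in the child just before it execs. The function name and default values below are hypothetical:

```python
import resource
import subprocess


def run_with_limits(
    command: str,
    mem_bytes: int = 4 * 1024**3,  # address-space cap, per process
    cpu_seconds: int = 60,         # CPU time, not wall-clock time
    timeout: float = 120.0,        # wall-clock cap enforced by the parent
) -> subprocess.CompletedProcess:
    """Run a shell command with per-process resource limits (POSIX only)."""

    def apply_limits() -> None:
        # Runs in the forked child right before exec. Children of the command
        # inherit these limits, but each process gets its own budget.
        resource.setrlimit(resource.RLIMIT_AS, (mem_bytes, mem_bytes))
        resource.setrlimit(resource.RLIMIT_CPU, (cpu_seconds, cpu_seconds))

    return subprocess.run(
        command,
        shell=True,
        capture_output=True,
        text=True,
        preexec_fn=apply_limits,  # not safe if the calling process is multi-threaded
        timeout=timeout,          # raises TimeoutExpired after killing the shell
    )
```

Rlimits are per process, unlike the container's cgroup limit, so they complement rather than replace it: a command that forks many workers can still use more than `mem_bytes` in total, which is where the 4GB container ceiling takes over.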

The egress proxy

The egress proxy is the clever part of this architecture. Instead of disabling network and dealing with the pain of pip install, you control where traffic can go.

The proxy validates each request against an allowlist encoded in a JWT:

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timedelta, timezone
from typing import Optional


class SandboxManager:
    # ... __init__ and create_session as above ...

    def _generate_proxy_url(
        self,
        session_id: str,
        tenant_id: Optional[str],
        allowed_hosts: list[str],
    ) -> str:
        """Generate proxy URL with JWT-encoded allowlist."""
        payload = {
            "iss": "sandbox-egress-control",
            "session_id": session_id,
            "tenant_id": tenant_id,
            "allowed_hosts": ",".join(allowed_hosts),
            "exp": int((datetime.now(timezone.utc) + timedelta(hours=4)).timestamp()),
        }

        # Sign with HMAC-SHA256 (standard HS256 JWT: base64url segments, padding stripped)
        header_b64 = base64.urlsafe_b64encode(json.dumps({"typ": "JWT", "alg": "HS256"}).encode()).rstrip(b"=").decode()
        payload_b64 = base64.urlsafe_b64encode(json.dumps(payload).encode()).rstrip(b"=").decode()
        signature = hmac.new(self.signing_key.encode(), f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
        signature_b64 = base64.urlsafe_b64encode(signature).rstrip(b"=").decode()
        token = f"{header_b64}.{payload_b64}.{signature_b64}"

        # The token rides in the password field of the proxy URL
        return f"http://sandbox:jwt_{token}@{self.proxy_host}:{self.proxy_port}"
```
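Tools inside the sandbox never handle the JWT themselves. HTTP clients such as curl and `requests` (which pip uses) take the `user:password` part of the proxy URL and send it to the proxy as a `Proxy-Authorization: Basic` header. A sketch of that translation; the helper name is hypothetical:

```python
import base64
from urllib.parse import unquote, urlsplit


def proxy_authorization_header(proxy_url: str) -> str:
    """Turn http://user:pass@host:port into the Basic Proxy-Authorization value a client sends."""
    parts = urlsplit(proxy_url)
    if parts.username is None:
        raise ValueError("proxy URL has no credentials")
    # Userinfo may be percent-encoded in a URL; the header carries the raw values.
    credentials = f"{unquote(parts.username)}:{unquote(parts.password or '')}"
    return "Basic " + base64.b64encode(credentials.encode()).decode()
```

So the proxy receives `sandbox:jwt_<token>` base64-encoded, and stripping the `jwt_` prefix after decoding gives back the signed token.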

The proxy (a simple HTTP CONNECT proxy with JWT validation) checks each request:

```python
import asyncio

from aiohttp import web


# Method of the proxy server class
async def handle_connect(self, request: web.Request) -> web.StreamResponse:
    """Handle HTTPS CONNECT requests."""
    target = request.path_qs  # host:port
    host, port = target.rsplit(":", 1) if ":" in target else (target, 443)

    # Extract and verify JWT from Proxy-Authorization header
    allowed_hosts = self._get_allowed_hosts(request)
    if not self._is_host_allowed(host, allowed_hosts):
        return web.Response(status=403, text=f"Host not allowed: {host}")

    # Connect to target and pipe data bidirectionally
    reader, writer = await asyncio.open_connection(host, int(port))
    # ... bidirectional pipe between client and target
```

This solves the pip problem elegantly. The agent can pip install requests because pypi.org is in the allowlist. But it can’t exfiltrate data to evil.com.
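`_get_allowed_hosts` and `_is_host_allowed` aren't shown. Here is a minimal sketch of what they might do, assuming the token format produced by `_generate_proxy_url` above and a signing key shared by the manager and the proxy. The function names are hypothetical, and matching subdomains of allowed hosts is my own design choice:

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without '=' padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def verify_token(token: str, signing_key: str) -> Optional[dict]:
    """Return the payload if the HS256 signature is valid and `exp` is in the future."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        signature = _b64url_decode(sig_b64)
    except ValueError:
        return None
    expected = hmac.new(signing_key.encode(), f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):  # constant-time comparison
        return None
    payload = json.loads(_b64url_decode(payload_b64))
    if payload.get("exp", 0) < time.time():
        return None
    return payload


def allowed_hosts_from_header(proxy_auth: Optional[str], signing_key: str) -> list[str]:
    """Parse `Proxy-Authorization: Basic base64(user:jwt_<token>)` into an allowlist."""
    if not proxy_auth or not proxy_auth.startswith("Basic "):
        return []
    try:
        _user, _, password = base64.b64decode(proxy_auth[6:]).decode().partition(":")
    except (ValueError, UnicodeDecodeError):
        return []
    if not password.startswith("jwt_"):
        return []
    payload = verify_token(password[4:], signing_key)
    if payload is None:
        return []  # missing, forged, or expired token: allow nothing
    return [h for h in payload.get("allowed_hosts", "").split(",") if h]


def is_host_allowed(host: str, allowed_hosts: list[str]) -> bool:
    # Exact match, or a subdomain of an allowed host (api.github.com under github.com).
    host = host.lower().rstrip(".")
    return any(host == h or host.endswith("." + h) for h in allowed_hosts)
```

Inside `handle_connect`, the call would look like `allowed_hosts_from_header(request.headers.get("Proxy-Authorization"), signing_key)`. Note that `notgithub.com` does not match `github.com`, because the suffix check requires a leading dot.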

Streaming output

Users want to see output as it happens, not wait for completion. Each container runs process_api as PID 1 - an HTTP server that handles command execution. For streaming, it uses Server-Sent Events:

```python
import json
from typing import AsyncIterator

import httpx


async def exec_stream(
    self,
    session_id: str,
    command: str,
    workdir: str = "/workspace",
) -> AsyncIterator[dict]:
    """Execute a command and stream output via process_api SSE."""
    session = self.sessions.get(session_id)
    if not session:
        yield {"type": "error", "data": "Session not found"}
        return

    # timeout=None: httpx's default 5s timeout would cut off commands that go quiet
    async with httpx.AsyncClient(timeout=None) as client:
        async with client.stream(
            "POST",
            f"{session.api_url}/exec/stream",
            json={"command": command, "workdir": workdir},
        ) as response:
            async for line in response.aiter_lines():
                # SSE frames look like "data: {...}"; skip the blank separator lines
                if line.startswith("data: "):
                    yield json.loads(line[6:])
```

The init process inside the container handles the actual execution and streams stdout/stderr as SSE events. This is the same pattern Claude uses - PID 1 is a purpose-built binary that spawns shells for each command.
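process_api.py itself isn't shown. A minimal sketch of its streaming side, assuming aiohttp (which the Dockerfile installs) and the `/exec/stream` endpoint and `{"type", "data"}` event shape the client above expects. The helper names and the final `exit` event are hypothetical:

```python
import asyncio
import json
from typing import AsyncIterator


async def run_command_events(command: str, workdir: str = "/workspace") -> AsyncIterator[dict]:
    """Run `command` in a fresh shell and yield its stdout/stderr lines as events."""
    proc = await asyncio.create_subprocess_shell(
        command,
        cwd=workdir,
        stdout=asyncio.subprocess.PIPE,
        stderr=asyncio.subprocess.PIPE,
    )
    queue: asyncio.Queue = asyncio.Queue()

    async def pump(stream: asyncio.StreamReader, kind: str) -> None:
        # Forward each line from one pipe into the shared queue, then mark EOF with None.
        async for raw in stream:
            await queue.put({"type": kind, "data": raw.decode(errors="replace")})
        await queue.put(None)

    tasks = [
        asyncio.create_task(pump(proc.stdout, "stdout")),
        asyncio.create_task(pump(proc.stderr, "stderr")),
    ]
    finished = 0
    while finished < len(tasks):
        event = await queue.get()
        if event is None:
            finished += 1
        else:
            yield event
    await asyncio.gather(*tasks)
    yield {"type": "exit", "data": await proc.wait()}


def format_sse(event: dict) -> bytes:
    """Encode one event as a Server-Sent Events `data:` frame."""
    return f"data: {json.dumps(event)}\n\n".encode()


def make_app():
    # Imported here so the helpers above work even where aiohttp isn't installed.
    from aiohttp import web

    async def exec_stream(request: web.Request) -> web.StreamResponse:
        body = await request.json()
        response = web.StreamResponse(headers={"Content-Type": "text/event-stream"})
        await response.prepare(request)
        async for event in run_command_events(body["command"], body.get("workdir", "/workspace")):
            await response.write(format_sse(event))
        await response.write_eof()
        return response

    app = web.Application()
    app.router.add_post("/exec/stream", exec_stream)
    return app
```

`json.dumps` escapes the newline at the end of each output line, so every event fits on a single `data:` line, which is what the client's `startswith("data: ")` parsing relies on. Serving it would be `web.run_app(make_app(), port=2024)`.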

What it looks like from inside

```
$ uname -r
4.4.0 runsc                                 # gVisor, not host kernel

$ whoami
root                                        # root inside sandbox, no privileges outside

HTTP/1.1 200 OK                             # allowlisted host works

HTTP/1.1 403 Forbidden - Host not allowed   # egress proxy blocks unlisted hosts

$ ls /
workspace mnt usr bin …                     # full filesystem, writes restricted to /workspace
```

/workspace mounts to /data/tenants/{id}/workspace on the host.

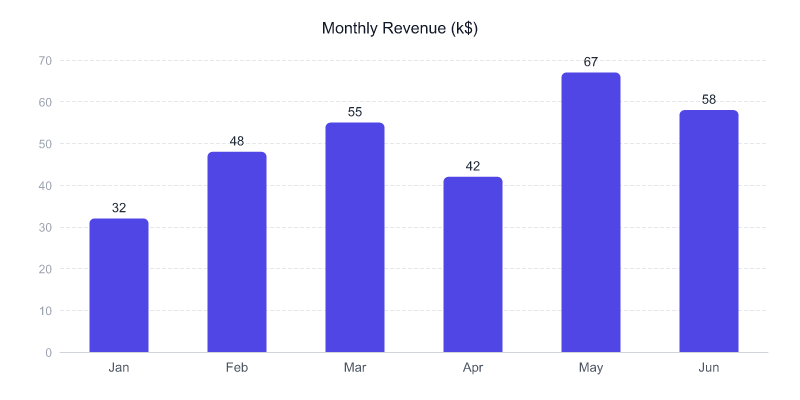

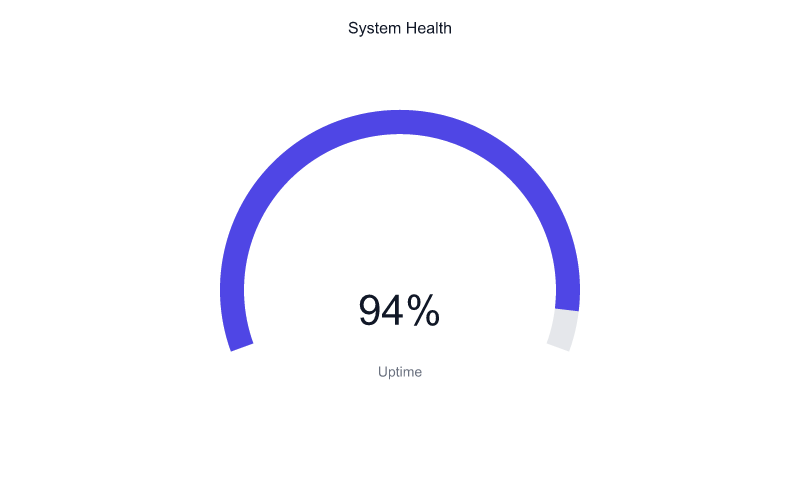

Benchmarks

```
$ python benchmark.py
Metric                          Value
------------------------------------------------------------
Cold Start (median)             439.28 ms
Cold Start (p95)                594.95 ms
Exec Latency (median)           3.45 ms
Exec Latency (p95)              8.52 ms
Memory per Session              24.6 MB
Latency @ 5 sessions            9.00 ms
Latency @ 10 sessions           13.10 ms
```

Cold start under 500ms median - faster than I expected. The p95 of ~600ms is likely the first-run outlier, when image layers aren't cached yet. Command execution at 3.5ms median is negligible. A baseline of ~25MB per session means roughly 40 idle sessions per GB of RAM (1024 / 24.6 ≈ 41); whatever the commands themselves allocate comes on top of that.

The interesting number is concurrent scaling: exec latency rises from 9ms to 13ms as you go from 5 to 10 sessions, about 0.8ms per extra session. That's a gradual slope, not a wall, at least at this scale.
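benchmark.py isn't shown. One hypothetical way to turn repeated timings into median and p95 figures like the ones in the table, using only the standard library; pass it whatever operation you want to time, such as a create-and-destroy cycle or a single exec call:

```python
import statistics
import time
from typing import Callable


def measure(fn: Callable[[], object], runs: int = 50) -> dict[str, float]:
    """Time `fn` repeatedly and report median and p95 latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return {
        "median_ms": statistics.median(samples),
        # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
        "p95_ms": statistics.quantiles(samples, n=20)[18],
    }
```

With only a few dozen runs, p95 rests on the slowest two or three samples, so a single uncached first run can dominate it.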

Trade-offs I accepted

No container pooling. Pre-warmed pools give you ~10-50ms latency instead of ~500ms. But session-scoped is simpler and the cold start hides behind LLM inference. I’ll add pooling when latency actually becomes a problem.

No snapshot/restore. Firecracker can snapshot a running VM and restore in 5-25ms. gVisor has checkpoint/restore (`runsc checkpoint` and `runsc restore`), but I haven't wired it into this setup. If I ever need sub-second container startup, I'll revisit Firecracker and accept the operational complexity.

Egress proxy is a separate process. The JWT-based proxy runs alongside your application. For a simple setup, network_mode: none is easier. But it’s worth it - agents that can’t pip install are significantly less useful.

gVisor’s syscall overhead. Some workloads see 2-10x slowdown on syscall-heavy operations. For “run Python scripts and shell commands” this is negligible. For high-frequency I/O, you’d notice.

No GPU support. gVisor has experimental GPU passthrough, but I haven’t needed it. When I do, this gets more complicated.

The punchline

Firecracker is technically superior. Faster boot, true VM isolation, snapshot/restore. But it requires KVM access, which means bare metal or nested virtualization. For most teams running on standard cloud infrastructure, that’s a non-starter.

gVisor is the practical choice. It works in standard Kubernetes, standard Docker, anywhere containers run. Google trusts it for Cloud Run. Anthropic trusts it for Claude. The isolation is strong enough to run as root inside the sandbox.

The pattern I learned from reverse-engineering Claude’s sandbox: gVisor as the hard security boundary, an egress proxy for network control instead of disabling it entirely, and session-scoped containers that hide cold start behind LLM inference latency.

If you’re building agents that execute code, you need something like this. The alternative - running untrusted code on your host - is not an option.

The code is available at github.com/Michaelliv/agentbox.